It’s Time to Make Your AI App Shine Before It’s Yesterday’s News.

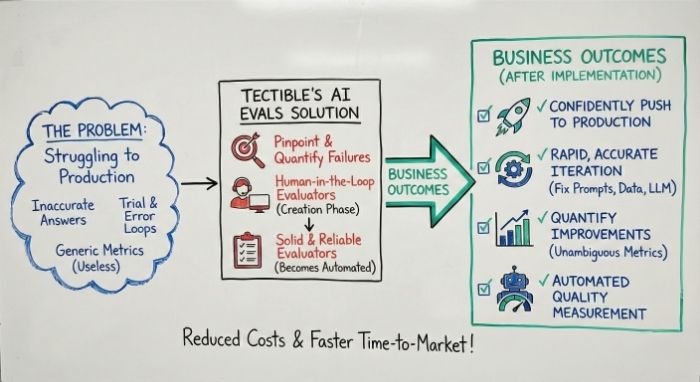

Your demo impressed, but real-world scaling revealed the cracks – just as it does for 80% of AI teams. At Tectible, we specialize in implementing AI evals tailored to your app, drawing on proven error analysis techniques to identify and resolve issues before they derail your launch.

AI development teams can’t fix what they can’t see. Usually, a dev team updates a prompt and then vibe-checks the answers the new prompt produces. This practice leads to loops of trial and error with no measurable improvement in the outputs. AI evals are the reliable way to pinpoint failures and quantify them.
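To make "pinpoint and quantify" concrete, here is a minimal sketch of how reviewed traces can be turned into failure rates. The trace records and category names (`wrong_retrieval`, `ignored_instruction`, `hallucinated_fact`) are hypothetical placeholders; your own categories would come out of reviewing your app's real outputs.

```python
from collections import Counter

# Hypothetical review notes: one entry per reviewed trace.
# An empty "failures" list means the reviewer found no problem.
reviewed_traces = [
    {"id": "t1", "failures": ["wrong_retrieval"]},
    {"id": "t2", "failures": []},
    {"id": "t3", "failures": ["wrong_retrieval", "ignored_instruction"]},
    {"id": "t4", "failures": ["hallucinated_fact"]},
    {"id": "t5", "failures": []},
]


def failure_rates(traces):
    """Return the share of traces affected by each failure category."""
    counts = Counter()
    for trace in traces:
        # set() so a category counts at most once per trace
        counts.update(set(trace["failures"]))
    total = len(traces)
    # most_common() puts the most frequent category first
    return {name: n / total for name, n in counts.most_common()}


rates = failure_rates(reviewed_traces)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%}")
```

A table like this tells the team which failure to fix first, and rerunning it after a change shows whether the fix actually moved the number.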

There are evaluation tools that provide generic scores like “Faithfulness,” “Helpfulness,” and “Tone.” Those generic metrics say little about how your specific application fails. Because LLMs generate outputs probabilistically, some degree of human review is needed to build solid, reliable evaluators for your AI application. Once those evaluators are validated against human judgment, they can be automated to catch inaccurate responses at scale.
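One way to connect human review to automation is to check how often an automated evaluator agrees with human pass/fail labels before trusting it. The sketch below assumes both sets of verdicts are plain booleans for the same responses; the function name and sample data are illustrative, not part of any specific tool.

```python
def agreement_with_humans(human_labels, evaluator_labels):
    """Compare automated pass/fail verdicts with human pass/fail labels.

    Both arguments are equal-length lists of booleans (True = pass).
    Returns the evaluator's true positive rate (human-approved responses
    it also passes) and true negative rate (human-rejected responses it
    also fails). A rate is None when there are no examples to compute it.
    """
    if len(human_labels) != len(evaluator_labels):
        raise ValueError("label lists must have the same length")

    pairs = list(zip(human_labels, evaluator_labels))
    on_human_pass = [e for h, e in pairs if h]
    on_human_fail = [e for h, e in pairs if not h]

    tpr = sum(on_human_pass) / len(on_human_pass) if on_human_pass else None
    tnr = (
        sum(not e for e in on_human_fail) / len(on_human_fail)
        if on_human_fail
        else None
    )
    return {"true_positive_rate": tpr, "true_negative_rate": tnr}


# Hypothetical labels for eight responses (True = acceptable).
human = [True, True, True, False, False, True, False, True]
auto = [True, True, False, False, True, True, False, True]

result = agreement_with_humans(human, auto)
print(result)
```

Reporting the two rates separately, rather than a single accuracy figure, shows whether the evaluator tends to miss bad responses or to flag good ones, which matters when failures are rare.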

With AI evals implemented by Tectible, your AI development team will know exactly where inaccuracies originate and will be able to:

- Rapidly iterate and fix exactly what needs fixing: the prompts, the data retrieval, or the choice of LLM for your specific tasks.

- Quantify improvements with unambiguous metrics.

- Continuously measure the quality of your AI application with automated evaluators.